Bubbles | On Unpredictability and the Work of Being Human | Something Entertaining


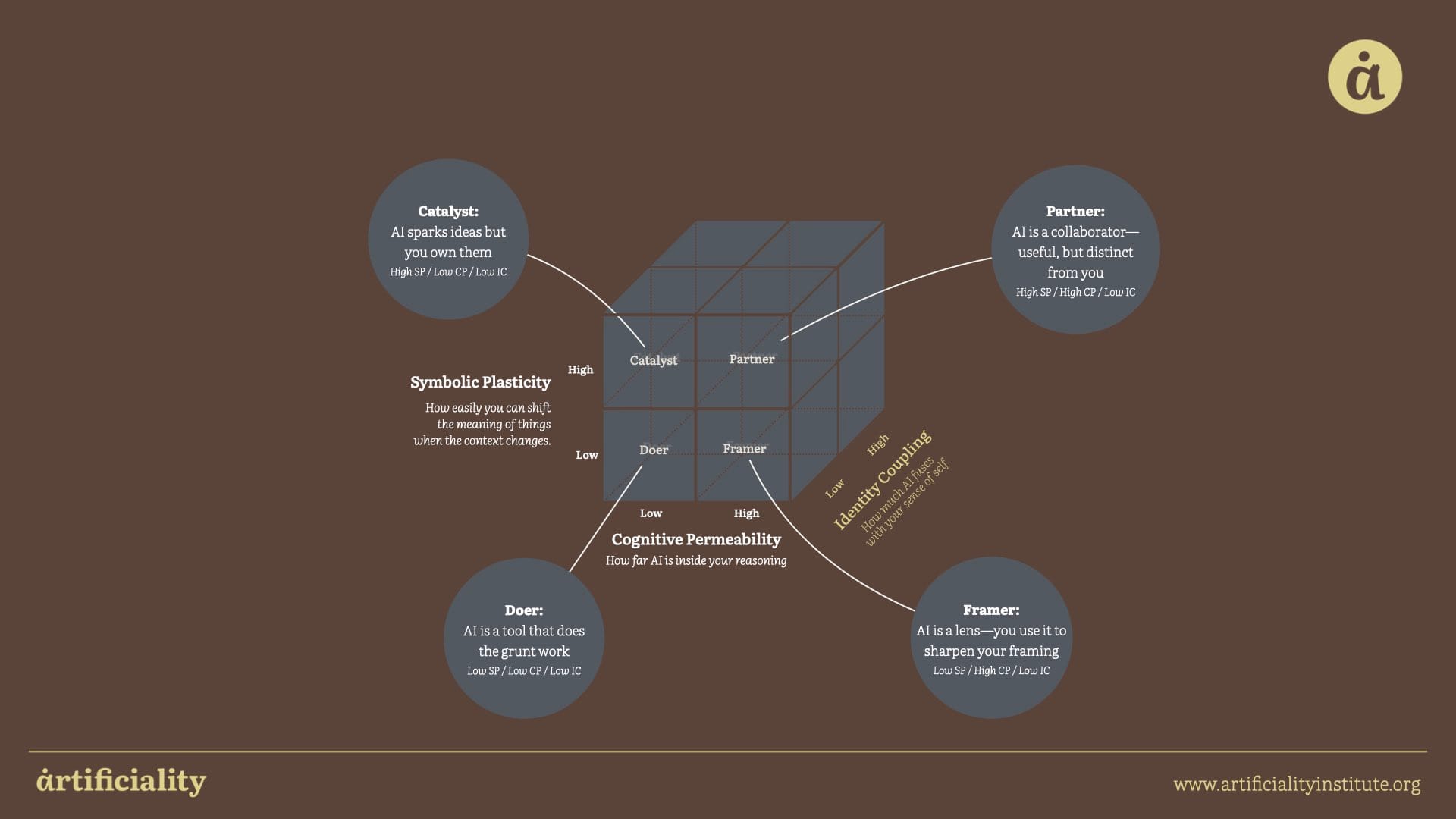

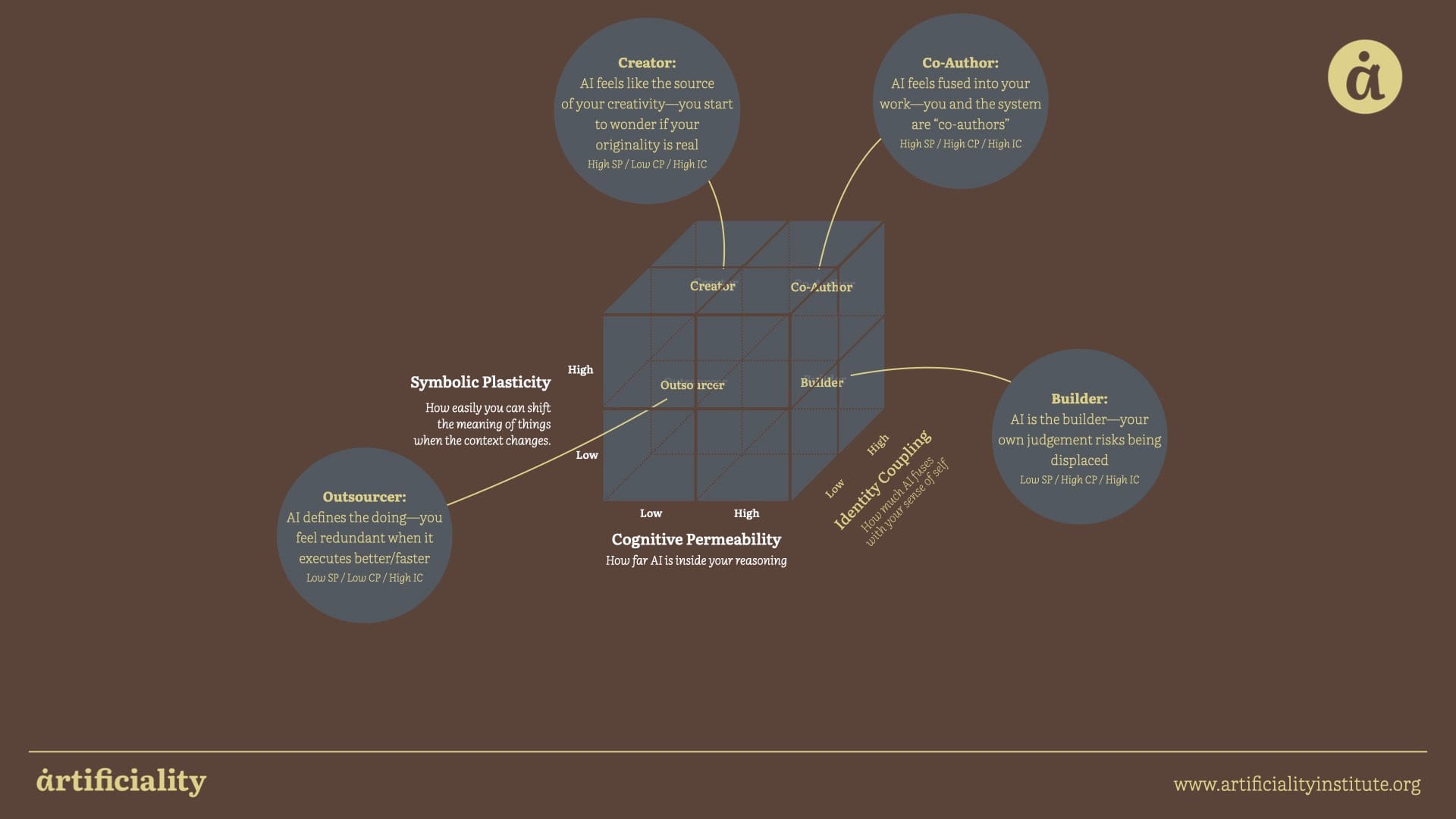

The AI Adaptation Cube maps, in three dimensions, the roles we put AI in based on three traits: Cognitive Permeability, Symbolic Plasticity, and Identity Coupling.

This article is from our series on Culture and AI, with insights derived from our research program, How We Think and Live with AI: Early Patterns of Human Adaptation. People are forming psychological relationships with AI systems that feel unprecedented to them. Our research maps the psychological changes happening as people incorporate AI into their thinking, creativity, and daily relationships.

Through workshop observations of over 1,000 people, informal interviews, and analysis of first-person online accounts, we observe humans developing three key psychological orientations toward AI: how far AI enters someone's reasoning (Cognitive Permeability), how closely their identity becomes entangled with AI interaction (Identity Coupling), and their capacity to shift the meaning of things when the context changes (Symbolic Plasticity).

We use the AI Adaptation Cube to map eight distinct modes of human-AI partnership, from Doer (where AI handles grunt work) to Co-Author (where AI becomes fused into your creative process).

AI is part of everyday life now. Productivity is the corporate metric, but the real story is adaptation. We see profound changes in how people attend to each other—and in how that attention shows up—once AI is part of the exchange. The same system can strengthen trust in one moment and weaken it in the next, depending on the role we assign it.

Take the AI notetaker. When someone uses it to capture notes so they can focus on you, and they make that intention clear, AI is a Doer: it records while they stay with the conversation. Their presence feels intact, and the AI sits in the background. But when the person shifts the AI's role so that it does the listening for them, the meaning changes. They lean on the system as if it were the listener, reducing themselves to a supervisor making sure the AI delivers. You notice the difference immediately: they seem less present, their attention elsewhere. We call this role Outsourcer, and this essay explores how it reshapes the social fabric and, in turn, our culture.

When someone seems less present, we get suspicious. Human brains evolved for this kind of detection, so we catch the signals almost instantly. We ask: Are they listening? Do they care about this? Where are they in this conversation? That’s our theory of mind at work: the human habit of guessing what others think and intend. In these moments, efficiency is not what we are tracking. What matters is the human side. We’re looking for authorship and intention, and for how the chosen role changes the trust we extend to one another.

These are patterns we can name. They emerge from three traits that shape every role. Cognitive Permeability is how much you let AI into your thinking. Symbolic Plasticity is how easily you change the frame when the context shifts. Identity Coupling is how far you let AI blend into your sense of self.

Each trait brings advantages as well as risks. Higher CP can sharpen analysis, surface patterns sooner, and shorten the path from signal to judgment. Higher SP helps you reframe fast, see new options, and design around context instead of forcing it to fit. Higher IC can expand confidence and range—you can take on work that once felt out of reach—so long as you stay clear about authorship.

Together, these traits explain why the same tool can feel different in use. A notetaker at low IC remains a Doer: the human still owns the act. At high IC, the same tool becomes an Outsourcer: the human seems to hand away presence and authorship—unless they declare the boundary and keep presence visible. A brainstorming system at low IC acts as a Catalyst, sparking ideas while you stay in charge. At high IC it becomes a Creator, which can unlock range and speed when you set clear criteria for what is yours.

This is what we sense in each other, even if we don’t name it. We're tracking where the thinking happens, who is doing the framing, and who is authoring. When those signals aren't clear, we guess. Our guesses are contagious and soon shape group norms, trust, and culture.

Our theory of mind runs in the background all the time. We watch each other for signs of attention and ownership, then we build a story about who is thinking, who is deciding, and who is responsible. When AI enters the scene, that story changes quickly—because the person changes the role they give the AI. The tool stays the same; the role shifts; the room recalibrates.

We map these roles in three dimensions and call the result the AI Adaptation Cube. The Cube gives us words for those roles (Doer, Outsourcer, Catalyst, Creator, Framer, Builder, Partner, Co-Author) and for the traits behind them (CP, SP, IC). With a shared language, we can declare the role we’re using rather than forcing others to guess. That simple act keeps presence visible, keeps authorship clear, and keeps trust intact. It also lets us design culture on purpose: we can agree on when to use each role, how to show our work, and how to hand off judgment without handing away identity.

Before identity enters the picture, the Cube starts with four roles shaped by CP and SP. These roles are common starting points, and each offers clear benefits alongside possible blind spots.

Doer. AI handles repetitive work. It reformats spreadsheets, cleans up notes, drafts routine text. The gain is obvious: time saved and attention freed. The risk is subtle: people who rely too heavily on speed can lose the understanding that comes from doing the work themselves. Declared openly, Doer use helps teams see where efficiency is working without confusion about authorship.

Catalyst. AI sparks new ideas. It generates metaphors, suggests design angles, pushes thinking in fresh directions. The benefit is range and play, the ability to move quickly through more possibilities. The risk is mistaking novelty for progress. The value grows when people own which sparks they keep and explain why.

Framer. AI sharpens the lens. It highlights trends in a long report, summarizes research, or organizes arguments. The benefit is clarity: decisions come faster because the ground is clearer. The risk is assuming a polished frame is the right frame. The opportunity comes when people stress-test the frame together and name what might still be missing.

Partner. AI collaborates in the flow of work. It helps draft plans, edit documents, or shape briefs. The gain is speed and refinement through back-and-forth. The risk is blurred criteria if the team loses track of whose voice sets direction. Declaring Partner use helps preserve accountability while keeping the benefits of collaboration.

These four roles already explain why adoption looks uneven. Some produce measurable ROI; others shift culture and energy in ways that are harder to measure. Each role has real benefits when used with awareness.

The Cube deepens when identity enters. Doer can become Outsourcer, Catalyst can become Creator, Framer can become Builder, and Partner can become Co-Author. Each shift brings both risk and possibility, and how it plays out depends on whether the role is declared.

Doer → Outsourcer. At Doer, AI clears routine work so presence and judgment stay with the person. The gain is speed without loss of authorship. At Outsourcer, AI takes on more of the identity of the task. This can feel hollow if presence disappears, but it can also free space for more valuable work. Declaring the role makes the difference clear: “I’m letting the notetaker handle transcripts so I can focus here,” versus leaving others to guess.

Catalyst → Creator. At Catalyst, AI expands the idea space. It makes brainstorming faster and more playful. At Creator, the system begins to feel like the source of originality. This can shake confidence, yet it can also expand range and help people express what once stayed stuck in their heads. The opportunity comes when people keep their own practice alive and explain how AI shaped the result.

Framer → Builder. At Framer, AI sharpens your lens. It speeds analysis and surfaces patterns. At Builder, AI starts setting the frame itself, shaping how you structure your own reasoning. This can lead to over-reliance, but it can also stress-test assumptions and expose blind spots. When declared, the role can raise the level of reasoning in the room: “AI suggested this frame; here’s where I agree and where I don’t.”

Partner → Co-Author. At Partner, AI collaborates but stays distinct. It helps iterate and refine. At Co-Author, authorship fuses. This raises questions about originality, yet it also enables speed and scope that feel beyond reach alone. The opportunity grows when people describe the process clearly: “AI expanded the draft, I made the final calls.”

These contrasts are where culture is shaped. When roles remain hidden, people fill the gaps with suspicion. When roles are declared, presence and authorship stay visible. The benefit is not only less friction but more shared learning—teams see what works, adapt faster, and build collective intelligence.

We are still learning how to use AI. These are the very early days of finding new ways for people and machines to work together.

How a team uses AI matters more than any single person’s talent. Team intelligence comes from how people share and reason together. Diverse groups that communicate openly can make better decisions than even their smartest member. AI becomes part of that shared reasoning, so teams need clear guidelines on when and how to use it. Being transparent about what AI did (how it shaped thinking, what was kept and what was discarded) builds trust and helps others learn. Over time, these shared habits become part of the culture. Teams that integrate AI thoughtfully will gain wisdom together, not just save time.

In short, this is an early experiment. AI won’t make us superhuman on its own, but it can help our groups think better.